The USB-C for AI Has a Security Leak: How to Tame Exposed MCP Servers Before You Get Hacked

Table of Contents

- Introduction: The USB-C Promise with a Security Price

- The Agency Gap: It's Not Just About Data Anymore

- How The Attack Happens: The Confused Deputy

- The Fix: Architecting a Secure AI Stack

- Layer 1: The Network Layer with Cloudflare One

- Layer 2: The Governance Layer with Kong AI Gateway

- Layer 3: The Runtime Layer with Palo Alto & Lakera

- Building the Secure Agent Stack

- Conclusion: Don't Be the Low-Hanging Fruit

Introduction: The USB-C Promise with a Security Price

If you've been following the rapid rise of Agentic AI, you've likely heard of the Model Context Protocol (MCP). Anthropic calls it the "USB-C for AI applications"—a standard way to plug your LLMs into your data, whether it's in Google Drive, Slack, or your internal PostgreSQL database.

It is a game-changer for utility. But it is also a game-changer for risk.

Recent research from BitSight has uncovered a startling reality: roughly 1,000 MCP servers are currently exposed to the public internet, many with zero authentication [1]. For the enterprise, this isn't just a "data leak"—it is a full-blown "agency leak."

As a Fractional CAIO, I help organizations architect AI systems that are powerful and protected. Today, I'm going to break down why this is happening, why standard firewalls aren't enough, and how I implement the leading-edge stacks from Cloudflare, Kong, and Palo Alto Networks to secure your agency.

The Agency Gap: It's Not Just About Data Anymore

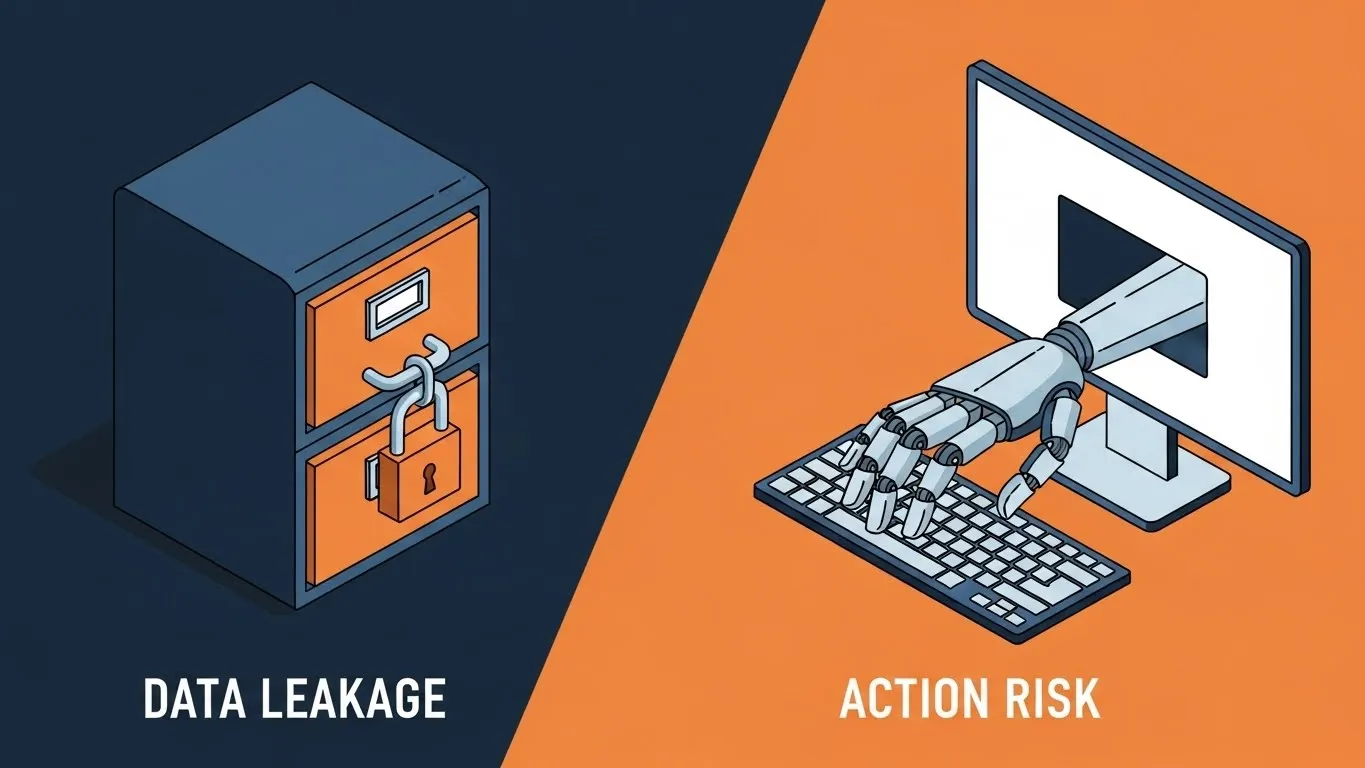

In the old world of cybersecurity, we worried about data leakage—someone reading your files. In the new world of Agentic AI, we have to worry about "Action Risk."

MCP servers are designed to do things. They have tools like execute_command, write_file, or send_email. When these servers are exposed without strict authorization, you aren't just letting attackers see your data; you are giving them your hands.

Understanding the Shift

| Traditional Risk | Agentic AI Risk |
|---|---|
| Data exfiltration | Command execution |
| Read access | Write/delete access |
| Passive observation | Active manipulation |
| Information theft | System control |

This fundamental shift requires a completely new security paradigm. Your existing data loss prevention (DLP) tools weren't designed to prevent an AI agent from executing arbitrary commands on your behalf.

How The Attack Happens: The Confused Deputy

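The most dangerous vulnerability we're seeing right now is the "Confused Deputy" attack. Many developers use libraries like fastmcp or mcp-remote to quickly spin up a server [2]. By default, some of these tools bind to 0.0.0.0, which means every network interface on the host rather than just localhost, and skip authentication to make development easier. On a machine with a public IP address or a permissive firewall, that combination puts the server on the open internet.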
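A minimal sketch of a safer default is a startup guard that refuses any bind address other than loopback unless the operator opts in explicitly. The function name `check_bind_host` and the `allow_public` flag are hypothetical and are not part of fastmcp, mcp-remote, or any MCP SDK.

```python
import ipaddress


def check_bind_host(host: str, allow_public: bool = False) -> str:
    """Return host if it is safe to bind, otherwise raise ValueError.

    Hypothetical startup guard, not an API of any MCP library.
    """
    if host == "localhost":
        return host
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        raise ValueError(f"not an IP address or 'localhost': {host!r}") from None
    # 0.0.0.0 and :: mean "every interface", not "local only",
    # so they fail the loopback test below.
    if addr.is_loopback or allow_public:
        return host
    raise ValueError(f"refusing to bind to non-loopback address {host}")
```

Calling `check_bind_host("0.0.0.0")` raises, while `check_bind_host("127.0.0.1")` passes through. Making the public bind an explicit opt-in means exposure is a deliberate decision rather than a forgotten default.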

The Nightmare Scenario

Here is the nightmare scenario: An attacker scans the web, finds your exposed MCP server on port 8080, and connects to it. They don't need to hack your password. They just ask the MCP server (the "Deputy") to execute a command. Since the server trusts its own tools, it executes the command on your internal network.

Attack Flow

- Discovery: Attacker scans common MCP ports (8080, 3000, etc.)

- Connection: Opens an HTTP connection (SSE or Streamable HTTP) to the exposed server

- Enumeration: Lists available tools via `tools/list`

- Exploitation: Invokes sensitive tools like `execute_command`

- Persistence: Establishes backdoor access or exfiltrates data

The server has no concept of "who" is asking—it simply executes because it was told to. This is the essence of the Confused Deputy problem: the trusted intermediary (your MCP server) can't distinguish between legitimate requests and malicious ones.
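To make the problem concrete, here is a rough sketch of the two JSON-RPC requests involved and of a toy dispatcher with and without a caller check. The `tools/list` and `tools/call` method names come from MCP; the handler functions, the token set, the `-32001` error code, and the fake `execute_command` tool are assumptions for illustration, and the tool only returns a string instead of running anything.

```python
from typing import Optional, Set

# JSON-RPC 2.0 requests using MCP's tools/list and tools/call methods.
LIST_TOOLS = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
CALL_TOOL = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "execute_command", "arguments": {"command": "id"}},
}

# Toy registry: a real tool would run the command; this one only echoes it.
TOOLS = {"execute_command": lambda args: f"ran: {args['command']}"}


def naive_handle(request: dict) -> dict:
    """Dispatch with no caller check: whoever connects is obeyed."""
    if request["method"] == "tools/list":
        result = {"tools": [{"name": name} for name in TOOLS]}
    else:
        params = request["params"]
        text = TOOLS[params["name"]](params["arguments"])
        result = {"content": [{"type": "text", "text": text}]}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def guarded_handle(request: dict, token: Optional[str], valid_tokens: Set[str]) -> dict:
    """Same dispatch, but callers without a valid credential are rejected."""
    if token not in valid_tokens:
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            # Arbitrary code from the implementation-defined error range.
            "error": {"code": -32001, "message": "unauthorized"},
        }
    return naive_handle(request)
```

`naive_handle(CALL_TOOL)` succeeds for any sender, which is exactly the exposure described above. `guarded_handle(CALL_TOOL, None, {"s3cret"})` returns an error instead. A production check would validate a signed token and use constant-time comparison rather than a set lookup.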

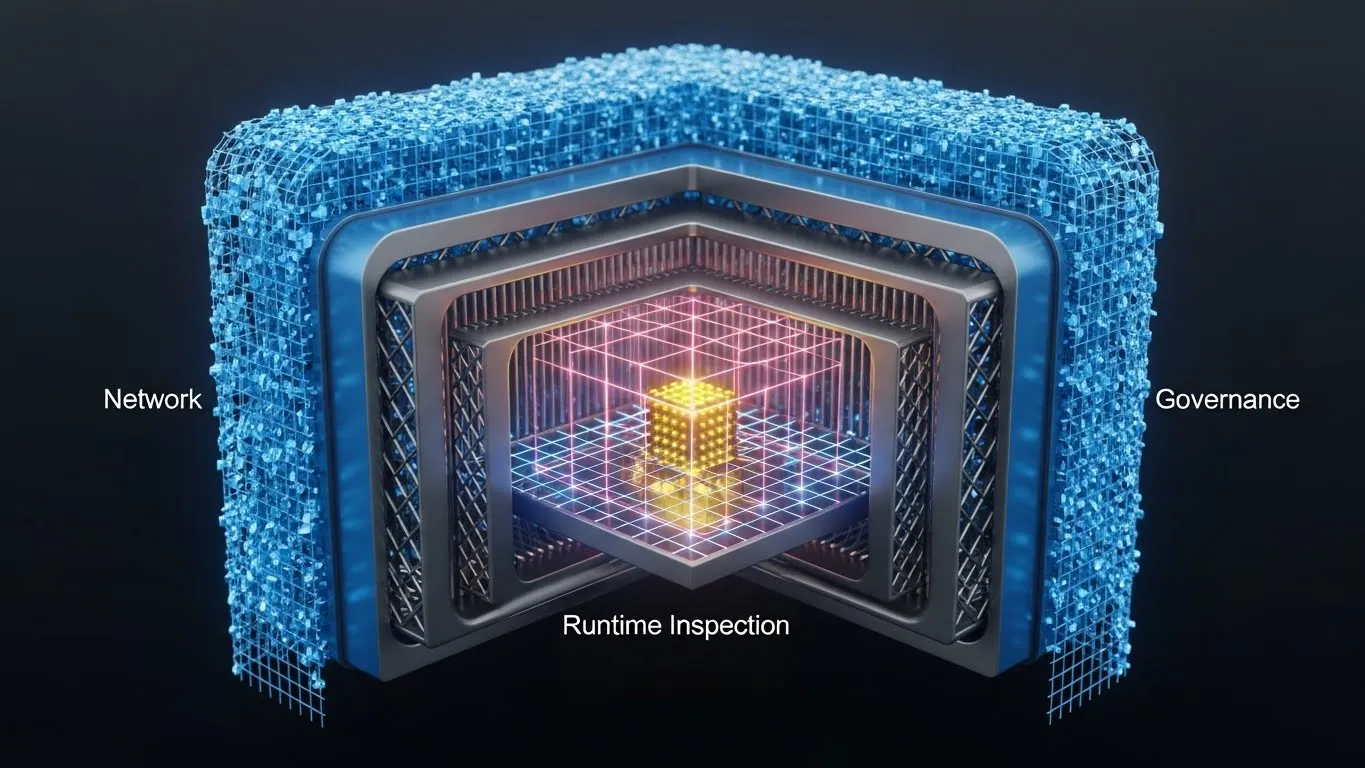

The Fix: Architecting a Secure AI Stack

You cannot rely on "security by obscurity." You need a defense-in-depth architecture that treats every AI agent as a user that must be verified.

In my practice, I evaluate vendors based on "Permissions Fit"—how granularly they can control what an agent can do. We aren't just looking for a firewall; we need an AI Control Plane.

Vendor Security Matrix

Here is how the top players stack up:

| Vendor | Layer | Key Capability | Best For |
|---|---|---|---|
| Cloudflare One | Network | Zero Trust tunnels | Distributed teams |
| Kong AI Gateway | Governance | OAuth 2.1, rate limiting | Complex internal tools |
| Palo Alto Prisma | Runtime | Prompt inspection | High-stakes environments |
| Lakera Guard | Runtime | Injection detection | Real-time defense |

Layer 1: The Network Layer with Cloudflare One

For distributed teams, I implement Cloudflare One. It allows us to put your MCP servers behind a "Zero Trust" tunnel. The server is never actually exposed to the internet; it's only visible to authenticated users via the Cloudflare edge. This solves the "visibility" problem identified by BitSight immediately.

Implementation Approach

    # cloudflared config example
    tunnel: your-tunnel-id
    credentials-file: /path/to/credentials.json
    ingress:
      - hostname: mcp.internal.yourcompany.com
        service: http://localhost:8080
        originRequest:
          access:
            required: true
            teamName: your-team
      - service: http_status:404

With this configuration, and with the MCP server itself bound only to localhost, nothing listens on a public interface, and Cloudflare handles all external access with mandatory authentication.

Layer 2: The Governance Layer with Kong AI Gateway

If you are building complex internal tools, we need Kong. Kong acts as the traffic cop for your agents. It enforces OAuth 2.1 centrally, meaning your developers don't have to write auth code for every single server.

More importantly, it allows us to rate-limit agents based on token usage, preventing a runaway bot from bankrupting your API budget.

Key Governance Controls

- Token-based rate limiting: Prevent cost overruns from recursive agents

- Request/response logging: Full audit trail for compliance

- Semantic caching: Reduce redundant LLM calls

- Circuit breakers: Automatic failover when services degrade
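Token-based rate limiting differs from ordinary request limiting in that the budget is counted in LLM tokens consumed, not in calls made. The following is a minimal sliding-window sketch of that idea. It is not Kong's implementation, and the class name, parameters, and injectable clock are assumptions chosen for illustration.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Tuple


class TokenBudgetLimiter:
    """Sliding-window limiter keyed by agent, counting LLM tokens, not requests."""

    def __init__(self, max_tokens: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_tokens = max_tokens
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        # agent id -> (timestamp, tokens) entries inside the current window
        self.usage: Dict[str, Deque[Tuple[float, int]]] = defaultdict(deque)

    def allow(self, agent_id: str, tokens: int) -> bool:
        """Record the usage and return True if it fits the agent's budget."""
        now = self.clock()
        entries = self.usage[agent_id]
        # Drop entries that have aged out of the window.
        while entries and now - entries[0][0] >= self.window_seconds:
            entries.popleft()
        used = sum(count for _, count in entries)
        if used + tokens > self.max_tokens:
            return False
        entries.append((now, tokens))
        return True
```

With `TokenBudgetLimiter(max_tokens=1000, window_seconds=60)`, an agent that has already spent 600 tokens is refused a 500-token call until the earlier usage ages out, while other agents are unaffected. A recursive agent therefore stalls at its budget instead of running up the bill.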

Layer 3: The Runtime Layer with Palo Alto & Lakera

Finally, for high-stakes environments, I deploy runtime defenders like Palo Alto Prisma AIRS or Lakera Guard. These tools sit inside the conversation. They inspect the payload of the prompt to stop "Tool Poisoning" and injection attacks before they ever reach your server.

What Runtime Defense Catches

- Prompt injection: Attempts to override system instructions

- Tool poisoning: Malicious instructions hidden in tool descriptions or tool responses

- Jailbreak attempts: Techniques to bypass safety guardrails

- Data exfiltration: Attempts to leak sensitive information via outputs
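To give a feel for where such a check sits, here is a deliberately naive sketch that screens text (a prompt, a tool description, or a tool response) against a few hard-coded patterns. It is not how Prisma AIRS or Lakera Guard work. The patterns and the `screen_text` function are assumptions for illustration, and keyword matching on its own is easy to evade.

```python
import re
from typing import List

# Illustrative patterns only; commercial runtime defenders use far richer detection.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"disregard (the )?system prompt", re.IGNORECASE),
    re.compile(r"do not (tell|inform|mention).{0,40}user", re.IGNORECASE),
]


def screen_text(text: str) -> List[str]:
    """Return the patterns that matched so the caller can block or log the text."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(text)]
```

An empty list means nothing matched. A non-empty list names the rules that fired, which can be written to the audit trail before the request is dropped.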

Building the Secure Agent Stack

Architecture Overview

    ┌─────────────────────────────────────────────────────────────┐
    │                       INTERNET / EDGE                       │
    ├─────────────────────────────────────────────────────────────┤
    │  Layer 1: Cloudflare One (Zero Trust Tunnel)                │
    │    - Identity verification                                  │
    │    - Device posture checks                                  │
    │    - Geo-blocking                                           │
    ├─────────────────────────────────────────────────────────────┤
    │  Layer 2: Kong AI Gateway (Governance)                      │
    │    - OAuth 2.1 enforcement                                  │
    │    - Rate limiting by token usage                           │
    │    - Request/response logging                               │
    ├─────────────────────────────────────────────────────────────┤
    │  Layer 3: Palo Alto / Lakera (Runtime Defense)              │
    │    - Prompt inspection                                      │
    │    - Injection detection                                    │
    │    - Tool poisoning prevention                              │
    ├─────────────────────────────────────────────────────────────┤
    │                       YOUR MCP SERVER                       │
    │    - Bound to localhost only                                │
    │    - Minimal tool permissions                               │
    │    - Audit logging enabled                                  │
    └─────────────────────────────────────────────────────────────┘
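The bottom box of the diagram, minimal tool permissions plus audit logging, can be sketched as a thin wrapper around tool dispatch. The role names, the `ROLE_TOOLS` mapping, and the `call_tool` function are hypothetical. In practice the role would come from the identity verified by the layers above.

```python
import logging
from typing import Any, Callable, Dict, Set

audit_log = logging.getLogger("mcp.audit")

# Hypothetical role-to-tool mapping: each caller role gets only the tools it needs.
ROLE_TOOLS: Dict[str, Set[str]] = {
    "reader": {"read_file"},
    "operator": {"read_file", "write_file"},
}


def call_tool(role: str, tool: str, args: Dict[str, Any],
              registry: Dict[str, Callable[[Dict[str, Any]], Any]]) -> Any:
    """Run a tool only if the role is allowed to, and log every decision."""
    allowed = tool in ROLE_TOOLS.get(role, set())
    # Log names only; arguments may contain secrets.
    audit_log.info("role=%s tool=%s allowed=%s", role, tool, allowed)
    if not allowed:
        raise PermissionError(f"role {role!r} may not call {tool!r}")
    return registry[tool](args)
```

Unknown roles get an empty allowlist, so the default is deny. Denied attempts are logged as well as successful ones, which is what makes the audit trail useful after an incident.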

Conclusion: Don't Be the Low-Hanging Fruit

The "USB-C for AI" is here to stay, but you shouldn't be plugging it into a dirty socket.

Implementing a secure Agentic AI stack doesn't have to slow you down. In fact, by using gateways like Kong or Cloudflare, we can actually accelerate development by offloading the heavy lifting of authentication and logging to the infrastructure.

Key Takeaways

- MCP servers exposed to the internet are agency leaks, not just data leaks

- The Confused Deputy attack is real and exploitable today

- Defense-in-depth requires network, governance, and runtime layers

- Zero Trust architecture is mandatory for production MCP deployments

If you are ready to move from "experimental" to "enterprise-grade," the path is clear: implement network isolation, enforce governance controls, and deploy runtime defense. Your AI agents are only as secure as the infrastructure that hosts them.