The AI Bias Playbook (Part 1): What We Talk About When We Talk About 'Bias'

Table of Contents

- The Human Cost of an Unexamined Algorithm

- Bias is Not Malice; It's a Mirror

- Deconstructing the Anatomy of Bias: Where It Comes From

- Conclusion

The Human Cost of an Unexamined Algorithm

The discussion of algorithmic bias must begin not with code, but with consequences. When AI models make decisions that affect human lives—from hiring and lending to criminal justice and healthcare—the stakes are measured in lost opportunities, systemic discrimination, and profound legal risk.

Consider two of the most well-documented real-world examples.

Amazon's Biased Hiring Tool

First, in hiring: Reuters reported in 2018 that Amazon had abandoned an experimental AI recruiting tool. The system was designed to review job applicants' resumes and assign a score, streamlining the hiring process. The model was trained on a decade of the company's own hiring data, which, as it turned out, reflected a strong historical bias toward male candidates in technical roles. The AI learned this pattern faithfully. It taught itself that male candidates were preferable and penalized resumes that contained the word "women's," as in "women's chess club captain." It also systematically downgraded graduates of two all-women's colleges. This was not a "glitch" or a "bug." The model was doing exactly what it was trained to do: treating the company's past biases as predictive patterns of success.

Digital Redlining in Lending

Second, in lending, AI models are used to determine creditworthiness. This has given rise to a new form of systemic discrimination known as "digital redlining." The practice of redlining, where banks historically denied services to residents of specific neighborhoods based on their race, was outlawed decades ago. However, algorithms have learned to replicate this practice. An investigation by The Markup found that, after controlling for 17 different financial factors, mortgage-approval algorithms were 80% more likely to deny Black applicants, 70% more likely to deny Native American applicants, and 40% more likely to deny Latino applicants than their White counterparts. These models are often "color-blind," meaning race is never an explicit input. Instead, they use other, seemingly neutral data points, such as an applicant's zip code, as a powerful proxy for race, effectively perpetuating and scaling historical discrimination.

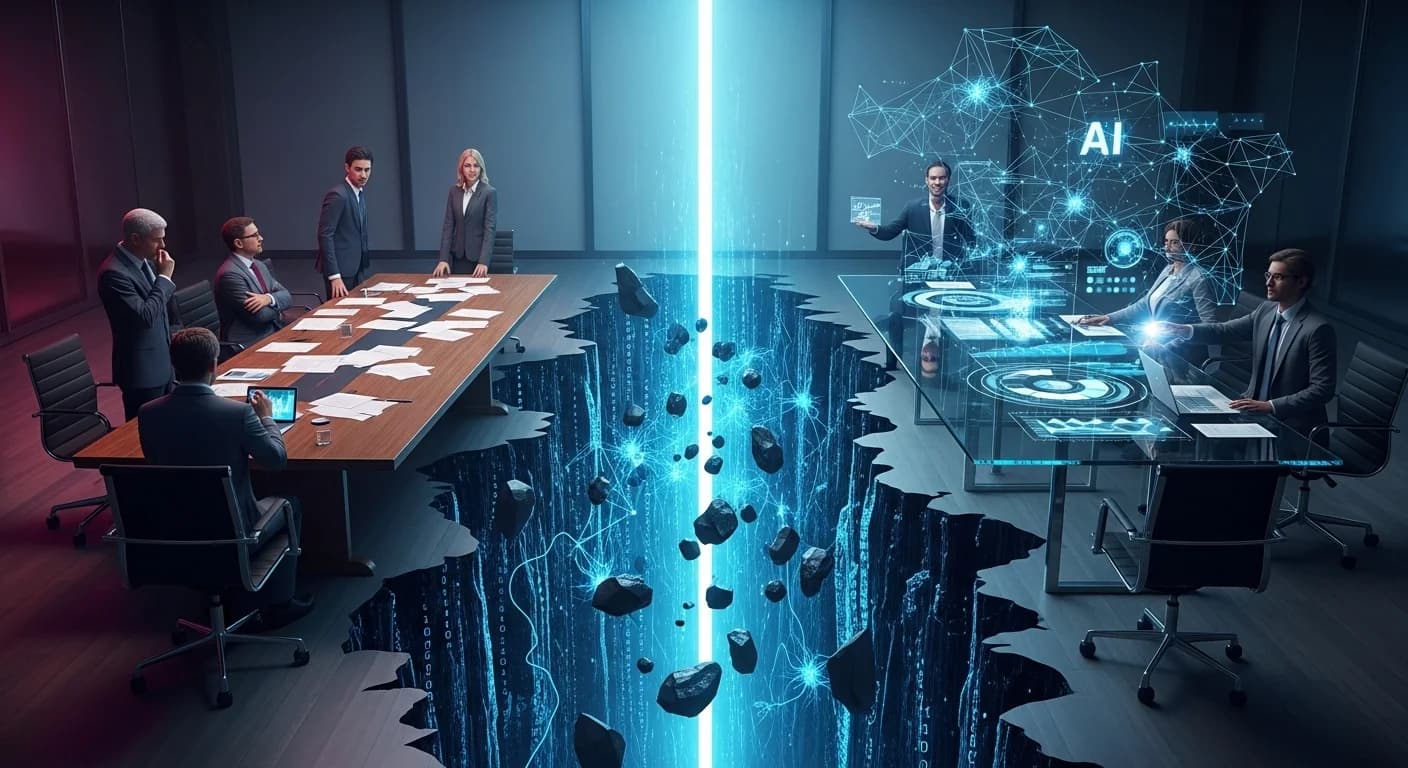

Bias is Not Malice; It's a Mirror

These examples are alarming, but they reveal a fundamental truth: the AI is not, in itself, malicious. An AI model "isn't racist; it's a pattern-matching tool". Its core function is to analyze massive datasets and identify the patterns that most accurately predict a desired outcome, based only on the data it is given.

The problem is that the data we feed it is a mirror of our own flawed human history. That data is rife with past biased patterns, systemic inequalities, and unexamined assumptions. The AI, lacking human context, ethics, or an understanding of social justice, cannot distinguish between a valid correlation (e.g., "applicants with this skill succeed") and a biased historical pattern (e.g., "applicants named 'Jared' were hired more often than applicants named 'Jamal'").

If a company's past data shows that fewer women were historically promoted to executive roles, the AI will learn that "being a man" is a predictive feature of success. The algorithm is simply learning from our past. This is why bias is the default state of any unexamined AI model. It is not an exception; it is a reflection.

Deconstructing the Anatomy of Bias: Where It Comes From

To mitigate bias, leaders must first be able to diagnose it. You cannot fix a problem you cannot name. While there are many typologies of bias, most risks fall into three primary categories. This framework is essential for any leader, as it separates historical problems from active, strategic ones.

1. Data Bias (The Biased Past)

This is the most common and intuitive form of bias. It means the data used to train the model is skewed, incomplete, or unrepresentative of the population it will serve. The data itself is a flawed reflection of the world.

Example (Representation Bias): Training an AI on historical hiring data in which women make up only a small fraction of past hires gives the model few examples of successful female candidates, so it learns to favor male applicants.

Example (Societal Bias): Training an image recognition model on a dataset where "scientists" are overwhelmingly depicted as men and "nurses" as women will teach the model to reinforce those stereotypes.

Example (Historical Bias): Using historical arrest data to predict future crime. This data reflects historical, and often biased, policing patterns, not an objective measure of criminal activity.
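
A first diagnostic for data bias is to compare each group's share of the training data with its share of the population the model will serve. The sketch below is illustrative only: the function name `representation_gap`, the group labels, and the 80/20 and 50/50 figures are assumptions, not values from any real dataset.

```python
from collections import Counter

def representation_gap(training_groups, reference_shares):
    """Return (training share - reference share) for each group.

    A large negative value means the group is underrepresented in the
    training data relative to the population the model will serve.
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical training set: 80 records for men, 20 for women.
training_groups = ["men"] * 80 + ["women"] * 20
# Hypothetical reference population: an even split.
reference_shares = {"men": 0.5, "women": 0.5}

gaps = representation_gap(training_groups, reference_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A gap near zero does not prove the data is fair (the labels themselves may encode historical bias), but a large gap is an early warning that the model will see too few examples of one group.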

2. Measurement Bias (The Biased Present)

This form of bias is more subtle and strategically dangerous. It arises not from the data itself, but from the proxy (the metric) that leaders choose to stand in for the outcome they want. When the proxy is a poor or discriminatory substitute for the real-world concept, the model optimizes for the wrong thing.

Example (Lending): The goal is "creditworthiness." The proxy chosen is "zip code." This is an active design choice that directly imports historical, geographic redlining into the model.

Example (Admissions): The goal is "capable student." The proxy chosen is "high GPA." This creates a measurement bias that fails to account for the fact that higher grades may be easier to achieve at certain schools than others, thus unfairly penalizing students from more rigorous programs.

This distinction is critical for legal and compliance leaders. Data Bias can sometimes be defended as an unfortunate historical reality that must be managed. Measurement Bias is an active, strategic choice made by the development team. It represents an unexamined assumption (e.g., "zip code is a fair proxy for risk") that is far more difficult to defend, as it implies a lack of due diligence in the model's very design.
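
One rough way to examine an assumption like "zip code is a fair proxy for risk" is to ask how well the proxy alone predicts the protected attribute. The sketch below is a simplified illustration, not an established audit procedure: the function names `proxy_strength` and `baseline`, the placeholder zip labels, and the group counts are all hypothetical.

```python
from collections import Counter, defaultdict

def proxy_strength(records):
    """Share of records whose group is guessed correctly when we always
    guess the majority group for each proxy value."""
    by_value = defaultdict(Counter)
    for proxy_value, group in records:
        by_value[proxy_value][group] += 1
    correct = sum(max(counts.values()) for counts in by_value.values())
    return correct / len(records)

def baseline(records):
    """Share guessed correctly when ignoring the proxy and always
    guessing the overall majority group."""
    groups = Counter(group for _, group in records)
    return max(groups.values()) / len(records)

# Hypothetical applicants as (zip code, group) pairs.
records = (
    [("zip_1", "group_a")] * 9 + [("zip_1", "group_b")] * 1
    + [("zip_2", "group_b")] * 8 + [("zip_2", "group_a")] * 2
)

print(f"baseline: {baseline(records):.2f}")
print(f"with zip code: {proxy_strength(records):.2f}")
```

If knowing the zip code lifts the guess well above the baseline, as it does in this toy data, a model that never sees the protected attribute can still reconstruct it from the proxy.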

3. Algorithmic Bias (The Biased Amplification)

This is bias that the model itself creates or amplifies. The model's complex, non-linear logic can take small, seemingly negligible skews in the data and magnify them into highly discriminatory outcomes. It can also emerge when a model designed for one context is deployed in a new, unanticipated context where its assumptions no longer hold. This is how a "neutral" pattern-matching tool becomes an active engine for discrimination, even when the input data and chosen proxies seem fair.
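
Amplification can be shown with one simple mechanism, a hard decision cutoff. This is only one of several ways a model can magnify a small skew, and the scores, group labels, and `THRESHOLD` value below are invented for illustration.

```python
from statistics import mean

# Hypothetical model scores for two groups. The averages differ by only 0.02.
scores = {
    "group_a": [0.62, 0.61, 0.60, 0.59],
    "group_b": [0.60, 0.59, 0.58, 0.57],
}
THRESHOLD = 0.595  # hypothetical approval cutoff

def selection_rate(values, threshold):
    """Fraction of scores at or above the cutoff."""
    return sum(v >= threshold for v in values) / len(values)

mean_gap = mean(scores["group_a"]) - mean(scores["group_b"])
rate_a = selection_rate(scores["group_a"], THRESHOLD)
rate_b = selection_rate(scores["group_b"], THRESHOLD)

print(f"gap in average score: {mean_gap:.2f}")
print(f"approval rates: {rate_a:.2f} vs {rate_b:.2f}")
```

In this toy data, a two-point difference in average score becomes a fifty-point difference in approval rate, because the cutoff happens to fall exactly where the two groups' scores overlap.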

Conclusion

Algorithmic bias is not a "glitch." It is a systemic feature learned from our data, encoded by our assumptions, and amplified by our models. You cannot "fix" it if you do not know where it comes from. The first and most important step for any leader is to stop calling it a bug and start treating it as a systemic, measurable, and manageable business risk.
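
To make "measurable" concrete, one common starting point is to compare selection rates across groups. The sketch below computes the ratio of the lowest to the highest rate and compares it with 0.8, the threshold used by the "four-fifths rule" screening heuristic from US employment guidance. The decision data and function names are hypothetical, and a ratio below 0.8 is a signal to investigate, not a legal finding.

```python
def selection_rates(outcomes):
    """Map each group to its share of favorable decisions (1 = favorable)."""
    return {group: sum(d) / len(d) for group, d in outcomes.items()}

def impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a favorable decision")
    return min(rates.values()) / highest

# Hypothetical model decisions for two groups of ten applicants each.
outcomes = {
    "group_a": [1, 1, 1, 0, 1, 0, 1, 1, 0, 1],
    "group_b": [1, 0, 0, 1, 0, 0, 1, 0, 0, 0],
}

ratio = impact_ratio(outcomes)
print(f"impact ratio: {ratio:.2f}")
print("flag for review" if ratio < 0.8 else "within heuristic threshold")
```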

Now that we know what bias is and where it comes from, we must understand the consequences. Next in Part 2: The Legal & Reputational Nightmare, we explore why "the algorithm did it" is the one defense that will fail you every time.
