Engineering the EU AI Act: A Technical Blueprint for 'Compliance-by-Design'

Table of Contents

- Introduction: The Paradigm Shift from Black Box to Glass Box

- The Core Challenge: Transparency as an API Contract (Article 13)

- The AI Gateway: Your Compliance Firewall

- Engineering "The Stop Button" (Article 14)

- Immutable Logging: The "Black Box" Recorder (Article 12)

- Visualizing Trust for the End User

- Conclusion

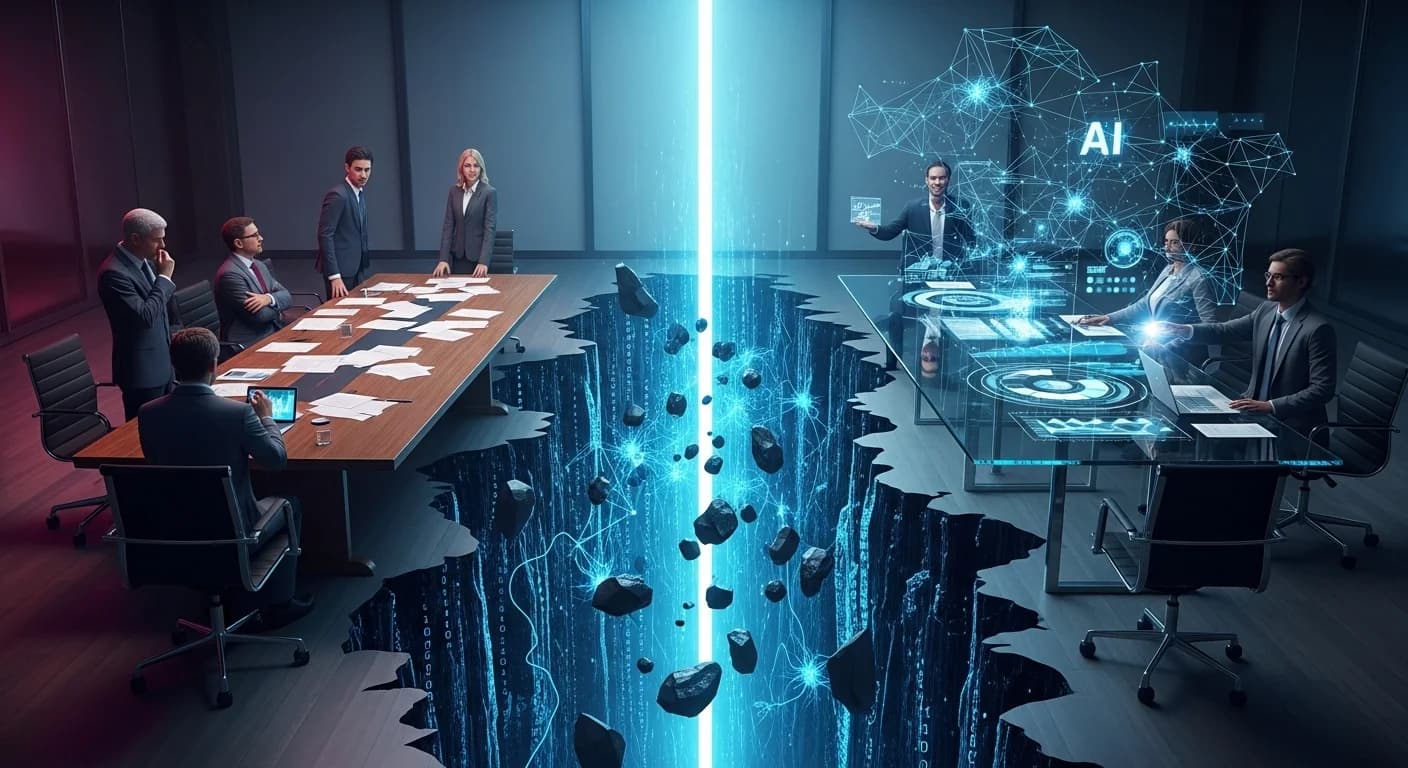

Introduction: The Paradigm Shift from Black Box to Glass Box

If you are an engineering leader in SaaS today, you are likely suffering from "regulatory fatigue." GDPR was a massive lift; now the EU AI Act, which entered into force in August 2024, is phasing in its obligations. But unlike GDPR, which governs how we process personal data, the AI Act governs how our AI systems make decisions and how humans can inspect and control them.

For engineers, this is a paradigm shift. We are moving from the "Black Box" era—where we optimized solely for accuracy and latency—to the "Glass Box" era. In this new world, transparency, explainability, and human oversight are not just product features; they are non-negotiable runtime constraints.

This post translates the dense legal requirements of the EU AI Act into concrete architectural patterns. We will explore how to build "Compliance-by-Design" into your stack using AI Gateways, immutable audit logs, and Human-in-the-Loop (HITL) state machines.

The Core Challenge: Transparency as an API Contract (Article 13)

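Article 13 requires high-risk AI systems to be designed so that their operation is "sufficiently transparent to enable deployers to interpret a system's output and use it appropriately." It also requires instructions for use that document, among other things, the system's expected level of accuracy and the circumstances that may lead to risks.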

From an API design perspective, this makes the "naked" prediction hard to defend. Returning a bare JSON object like {"credit_score": 720} gives the consumer no context to judge whether the output is reliable or whether the input falls outside the model's validated operating bounds. The Act does not prescribe a response format, but carrying that context in every response keeps it in front of the people who act on the output.

The Compliant Payload

We need to treat transparency as part of the API contract. A transparency-aware response object might look like this:

{
  "prediction": 720,
  "meta": {
    "model_version": "v2.3.1-fintech",
    "confidence_score": 0.89,
    "training_cutoff": "2024-01-15",
    "limitations": [
      "Not validated for income sources outside the EU",
      "Low confidence for self-employed applicants"
    ]
  },
  "explanation": {
    "id": "exp-99823-sh",
    "method": "SHAP",
    "top_factors": ["debt_to_income", "payment_history"]
  }
}

By embedding limitations and a confidence score directly in the payload, we force the consuming client (or UI) to confront the boundaries of the system. Treat these fields as part of the contract, not as optional metadata: they are where your transparency obligations meet your runtime.
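A minimal TypeScript sketch of that contract, assuming the field names from the JSON above: the types mirror the payload, and the hypothetical `wrapPrediction` helper refuses to emit a response whose confidence is out of range or whose limitations or explanation are missing. Those particular checks are illustrative design choices, not requirements spelled out in the Act.

```typescript
// Types mirroring the example payload; field names are taken from it.
interface ResponseMeta {
  model_version: string;
  confidence_score: number; // assumed to lie in [0, 1]
  training_cutoff: string; // ISO 8601 date
  limitations: string[];
}

interface Explanation {
  id: string;
  method: string; // e.g. "SHAP"
  top_factors: string[];
}

interface CompliantResponse<T> {
  prediction: T;
  meta: ResponseMeta;
  explanation: Explanation;
}

// Hypothetical constructor: the only way a service builds a response, so a
// bare ("naked") prediction cannot leave the service by accident.
function wrapPrediction<T>(
  prediction: T,
  meta: ResponseMeta,
  explanation: Explanation,
): CompliantResponse<T> {
  // Written as a negation so that NaN is rejected too.
  if (!(meta.confidence_score >= 0 && meta.confidence_score <= 1)) {
    throw new RangeError(`confidence_score out of range: ${meta.confidence_score}`);
  }
  if (meta.limitations.length === 0) {
    throw new Error("refusing to emit a response with no documented limitations");
  }
  if (explanation.top_factors.length === 0) {
    throw new Error("refusing to emit a response with no explanation factors");
  }
  return { prediction, meta, explanation };
}
```

Funnelling every response through one constructor means a service cannot return a bare number without someone deliberately bypassing the envelope.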

The AI Gateway: Your Compliance Firewall

You do not want to reimplement compliance logic in every microservice. A pattern that addresses this is the AI Gateway: a specialized middleware layer between your application and your inference models (LLMs or predictive models) through which every inference request passes.

Think of it as a dedicated firewall for AI risk. It handles:

1. PII Redaction

Intercepting prompts to strip personal data (names, national ID numbers, account numbers) before it reaches the model's context window. This supports GDPR data minimisation and reduces your exposure if prompts are logged or retained by a third-party model provider.

2. Shadow Testing

Mirroring live traffic, or a sample of it, to a "challenger" model whose outputs are logged for comparison but never returned to users. This lets you evaluate a candidate for bias and accuracy on real inputs without affecting production decisions.

3. Policy Enforcement

Using Policy-as-Code engines (like Open Policy Agent) to block requests that violate governance rules. For example: "Do not allow inference on users under 18" or "Block queries containing financial advice requests."
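The first two responsibilities can be sketched as a single request handler. This is a minimal sketch under several assumptions: `ModelFn` stands in for whatever inference client you use, the two regexes (email addresses and US-style SSNs) are illustrative and nowhere near a complete PII detector, and the 5% shadow rate is an arbitrary default. Shadow traffic is mirrored after the production call and its result is only logged.

```typescript
import { createHash } from "node:crypto";

// Hypothetical inference client signature; swap in your real client.
type ModelFn = (prompt: string) => Promise<string>;

// Illustrative patterns only. Regexes catch well-formatted identifiers; names
// and free-text personal data need an NER-based detector on top.
const PII_PATTERNS: Array<[RegExp, string]> = [
  [/[\w.+-]+@[\w-]+\.[\w.-]+/g, "[EMAIL]"],
  [/\b\d{3}-\d{2}-\d{4}\b/g, "[SSN]"], // US SSN format, as an example ID
];

function redactPII(text: string): string {
  return PII_PATTERNS.reduce((acc, [re, label]) => acc.replace(re, label), text);
}

// Deterministic sampling: hashing the request id means the same request is
// always in (or out of) the shadow sample.
function inShadowSample(requestId: string, rate: number): boolean {
  const bucket = createHash("sha256").update(requestId).digest().readUInt32BE(0);
  return bucket / 2 ** 32 < rate;
}

async function handleInference(
  requestId: string,
  prompt: string,
  production: ModelFn,
  challenger: ModelFn,
  logShadow: (requestId: string, prod: string, shadow: string) => void,
  shadowRate = 0.05,
): Promise<string> {
  const safePrompt = redactPII(prompt);
  const prodOutput = await production(safePrompt);
  if (inShadowSample(requestId, shadowRate)) {
    // Fire-and-forget: the challenger's answer is only logged; its latency
    // and failures never reach the user-facing response.
    void challenger(safePrompt)
      .then((shadowOutput) => logShadow(requestId, prodOutput, shadowOutput))
      .catch(() => undefined); // in practice, record a metric here
  }
  return prodOutput;
}
```

Hashing the request id, rather than calling `Math.random()`, makes the sample reproducible: replaying the same request decides the same way, which helps when you later need to explain why a request was or was not shadowed.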

This centralization allows you to update compliance policies globally without redeploying your entire mesh. Your governance layer becomes a single control plane for all AI interactions.
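To illustrate the centralization point without depending on an OPA deployment, here is a small stand-in: policies are plain JSON data in a hypothetical rule format (OPA itself uses the Rego language), evaluated by a generic engine and swapped atomically at runtime. Numeric rules fail closed, so a request that omits `age` is blocked rather than waved through.

```typescript
// Hypothetical declarative rule format, standing in for Rego policies in OPA.
interface Rule {
  id: string;
  field: string; // top-level request attribute to inspect
  op: "lt" | "gt" | "contains";
  value: number | string;
  message: string;
}

interface PolicySet {
  version: string;
  rules: Rule[];
}

type GatewayRequest = Record<string, unknown>;

function violates(rule: Rule, req: GatewayRequest): boolean {
  const v = req[rule.field];
  switch (rule.op) {
    // Numeric rules fail closed: a missing or non-numeric field is a violation.
    case "lt":
      return typeof v !== "number" || v < Number(rule.value);
    case "gt":
      return typeof v !== "number" || v > Number(rule.value);
    case "contains":
      return typeof v === "string" &&
        v.toLowerCase().includes(String(rule.value).toLowerCase());
    default:
      return true; // unknown operators also fail closed
  }
}

class PolicyStore {
  private active: PolicySet = { version: "empty", rules: [] };

  // Validates, then swaps the whole policy set in one assignment, so requests
  // never see a half-loaded mix of old and new rules.
  load(json: string): void {
    const parsed = JSON.parse(json) as PolicySet;
    if (typeof parsed.version !== "string" || !Array.isArray(parsed.rules)) {
      throw new Error("invalid policy set");
    }
    this.active = parsed;
  }

  evaluate(req: GatewayRequest): { allowed: boolean; version: string; reasons: string[] } {
    const reasons = this.active.rules
      .filter((rule) => violates(rule, req))
      .map((rule) => `${rule.id}: ${rule.message}`);
    return { allowed: reasons.length === 0, version: this.active.version, reasons };
  }
}
```

Returning the policy `version` with every decision lets the audit log record which rule set allowed or blocked each request.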

Engineering "The Stop Button" (Article 14)

Article 14 is perhaps the most demanding requirement for engineers. It requires that high-risk systems can be "effectively overseen by natural persons," including the ability to "interrupt the system through a 'stop' button or a similar procedure that allows the system to come to a halt in a safe state."

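This is not just a UI widget; it is a state machine requirement. You cannot have a "fire-and-forget" architecture for high-stakes decisions. You need a variant of the Circuit Breaker pattern: the classic version trips when a downstream dependency keeps failing, while this one trips when the model is unsure or the input looks anomalous, and instead of failing fast it reroutes the decision to a human.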
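In its simplest form, the stop button is a two-state machine that every automated action must consult. The sketch below keeps the state in process memory and uses a hypothetical allow-list of operator ids; in a multi-instance deployment the flag would live in a shared store so that one press halts every replica. It assumes that "halted" means no decision executes automatically and new work is queued for humans, which is one reasonable reading of a safe state for a decision system.

```typescript
type OversightMode = "automated" | "halted";

interface ModeChange {
  at: Date;
  by: string;
  mode: OversightMode;
  reason: string;
}

class StopButton {
  private mode: OversightMode = "automated";
  private readonly history: ModeChange[] = [];
  private readonly operators: ReadonlySet<string>;

  constructor(authorizedOperators: Iterable<string>) {
    this.operators = new Set(authorizedOperators);
  }

  halt(by: string, reason: string): void {
    this.transition("halted", by, reason);
  }

  resume(by: string, reason: string): void {
    this.transition("automated", by, reason);
  }

  // Checked before every automated action. When this returns false, callers
  // must queue the work for human review instead of executing it.
  allowsAutomation(): boolean {
    return this.mode === "automated";
  }

  // Every press is recorded, so the audit trail shows who halted and why.
  changes(): readonly ModeChange[] {
    return this.history;
  }

  private transition(mode: OversightMode, by: string, reason: string): void {
    if (!this.operators.has(by)) {
      throw new Error(`${by} may not change the oversight mode`);
    }
    this.mode = mode;
    this.history.push({ at: new Date(), by, mode, reason });
  }
}
```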

The Circuit Breaker Pattern

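If a model's confidence score drops below a set threshold (e.g., 0.85 on a 0 to 1 scale), or if an Out-of-Distribution (OOD) detector flags the input as anomalous, the system must automatically divert the flow from the automated execution path to a human review queue. A confidence threshold is only meaningful if the model's scores are calibrated, so check calibration before choosing the cut-off.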
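The routing rule itself is small. In this sketch the 0.85 threshold and the `Prediction` shape are assumptions, and the comparison is written so that a missing or `NaN` confidence fails closed (it goes to a human) instead of slipping through as automated.

```typescript
// Hypothetical shape of a scored prediction coming out of the model service.
interface Prediction {
  value: number;
  confidence: number; // assumed calibrated, in [0, 1]
  oodFlags: string[]; // names of anomaly detectors that fired
}

type Route =
  | { kind: "auto"; value: number }
  | { kind: "review"; reasons: string[] };

function route(p: Prediction, threshold = 0.85): Route {
  const reasons: string[] = [];
  // Written as a negation so NaN or a missing score fails closed.
  if (!(p.confidence >= threshold)) {
    reasons.push(`confidence ${p.confidence} below ${threshold}`);
  }
  for (const flag of p.oodFlags) {
    reasons.push(`out-of-distribution: ${flag}`);
  }
  return reasons.length === 0
    ? { kind: "auto", value: p.value }
    : { kind: "review", reasons };
}
```

Collecting every reason, rather than stopping at the first, gives the reviewer the full picture of why the breaker tripped.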

This workflow requires three key components:

1. State Persistence

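Store the request in a PendingReview state in a durable store such as PostgreSQL (Redis qualifies only with append-only-file persistence enabled, since its default snapshotting can lose recent writes on a crash). The request must survive system restarts and be queryable for audit purposes.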

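interface PendingReview {
  id: string;
  timestamp: Date;
  input_hash: string;              // SHA-256 of the original request
  model_output: ModelPrediction;   // your model's prediction type (defined elsewhere)
  confidence_score: number;
  ood_flags: string[];             // which anomaly detectors fired
  status: 'pending' | 'approved' | 'rejected' | 'overridden';
  reviewer_id?: string;            // set once a human acts
  review_timestamp?: Date;
}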

2. Oversight Interface

A dashboard where a human can approve, reject, or override the AI's decision. This interface must display the full context: the input, the model's reasoning, confidence scores, and any flagged anomalies.

3. Override Write-Back

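If a human overrides the AI, tag the data point and capture it as a labeled example for future retraining. Route overrides through a vetting step before they enter the training set: Article 15 asks that systems which continue to learn after deployment reduce the risk of biased outputs feeding into future operations (feedback loops), and unvetted overrides can carry an individual reviewer's biases into the model. Handled this way, overrides become a steady source of corrective training data without undermining compliance.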
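The review step and the write-back can be sketched together as a pure state transition, assuming a simplified version of the `PendingReview` record above. Only `pending` items may transition, every transition records who acted and when, and an override emits a training candidate marked `vetted: false`, so nothing reaches the training set until a separate check clears it. All names here are hypothetical.

```typescript
type ReviewStatus = "pending" | "approved" | "rejected" | "overridden";

// Simplified, hypothetical version of the PendingReview record.
interface ReviewItem<T> {
  id: string;
  model_output: T;
  status: ReviewStatus;
  reviewer_id?: string;
  review_timestamp?: Date;
  final_output?: T;
}

// An override captured for retraining; held back until a second check vets it.
interface TrainingCandidate<T> {
  review_id: string;
  model_output: T;
  human_output: T;
  reviewer_id: string;
  vetted: boolean;
}

type Decision<T> =
  | { action: "approve" }
  | { action: "reject" }
  | { action: "override"; output: T };

function applyReview<T>(
  item: ReviewItem<T>,
  reviewerId: string,
  decision: Decision<T>,
  now: Date = new Date(),
): { item: ReviewItem<T>; candidate?: TrainingCandidate<T> } {
  // Only pending items may move; decided items are final.
  if (item.status !== "pending") {
    throw new Error(`review ${item.id} is already ${item.status}`);
  }
  const reviewed = { ...item, reviewer_id: reviewerId, review_timestamp: now };
  switch (decision.action) {
    case "approve":
      return { item: { ...reviewed, status: "approved", final_output: item.model_output } };
    case "reject":
      return { item: { ...reviewed, status: "rejected" } };
    case "override":
      return {
        item: { ...reviewed, status: "overridden", final_output: decision.output },
        candidate: {
          review_id: item.id,
          model_output: item.model_output,
          human_output: decision.output,
          reviewer_id: reviewerId,
          vetted: false,
        },
      };
    default:
      throw new Error("unknown review action");
  }
}
```

Because `applyReview` returns new objects instead of mutating, the persistence layer can write the result with a conditional update (for example, only where the stored status is still `pending`), which stops two reviewers from deciding the same item concurrently.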

Immutable Logging: The "Black Box" Recorder (Article 12)

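When a regulator asks why your AI denied a loan three years ago, "we lost the logs" is not an acceptable answer. Article 12 requires high-risk systems to technically allow the automatic recording of events (logs) over the "lifetime of the system."

Standard application logging (an ELK stack with 30-day retention) will not cut it. The Act requires providers and deployers to keep these logs for at least six months (Articles 19 and 26), sector rules such as those in financial services often require far longer, and the logs must still be trustworthy when they are finally read. You need forensic-grade, immutable logging.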

WORM Storage

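Use Write-Once-Read-Many storage (such as AWS S3 Object Lock or Azure Blob Storage immutability policies) so that logs cannot be altered or deleted during their retention period. In S3's compliance mode, for example, no user, including the account root user, can overwrite or delete a locked object version until its retention period expires. That guarantee is what gives the logs evidentiary weight in a regulatory audit.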
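For S3 Object Lock, retention is set per object at write time. The sketch below only builds the request parameters; the field names follow the S3 `PutObject` API as exposed by the AWS SDK for JavaScript v3, and you would pass the object to `new PutObjectCommand(...)` from `@aws-sdk/client-s3` against a bucket that already has Object Lock enabled. The key layout, the helper names, and the choice of compliance mode (the stricter of S3's two retention modes) are assumptions. S3 requires a checksum (Content-MD5 or an SDK checksum algorithm) on uploads that set Object Lock retention, hence `ChecksumAlgorithm`. The sketch enforces a six-month floor, the minimum log retention the Act sets for providers and deployers.

```typescript
// Field names follow the S3 PutObject API; the object is meant to be passed to
// new PutObjectCommand(...) from @aws-sdk/client-s3, which is not imported here.
interface LockedPutParams {
  Bucket: string;
  Key: string;
  Body: string;
  ContentType: string;
  ChecksumAlgorithm: "SHA256"; // S3 requires a checksum when retention is set
  ObjectLockMode: "COMPLIANCE";
  ObjectLockRetainUntilDate: Date;
}

// Minimum log retention under the AI Act; sector rules may require more.
const MIN_RETENTION_MONTHS = 6;

// Month-end dates roll forward (31 Aug 2025 + 6 months lands on 3 Mar 2026),
// which can only lengthen retention, never shorten it.
function addMonthsUTC(d: Date, months: number): Date {
  const r = new Date(d.getTime());
  r.setUTCMonth(r.getUTCMonth() + months);
  return r;
}

function lockedLogParams(
  bucket: string,
  entryId: string,
  body: string,
  createdAt: Date,
  retentionMonths: number,
): LockedPutParams {
  const months = Math.max(retentionMonths, MIN_RETENTION_MONTHS);
  const day = createdAt.toISOString().slice(0, 10); // partition keys by UTC date
  return {
    Bucket: bucket,
    Key: `audit/${day}/${entryId}.json`,
    Body: body,
    ContentType: "application/json",
    ChecksumAlgorithm: "SHA256",
    ObjectLockMode: "COMPLIANCE",
    ObjectLockRetainUntilDate: addMonthsUTC(createdAt, months),
  };
}
```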

Cryptographic Chaining

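For stronger tamper evidence, chain your log entries: each entry stores the hash of the previous entry, and its own hash covers that link. If a single entry is altered or deleted, verification fails from that point in the chain onward, which flags the tampering the next time the chain is checked. Merkle trees, as used by Certificate Transparency logs, extend the same idea and allow efficient proofs that a given entry is included in the log.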

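interface AuditLogEntry {
  id: string;
  timestamp: Date;
  input_snapshot_hash: string;   // SHA-256 of the input snapshot
  model_binary_hash: string;     // SHA-256 of the model weights file
  output_decision: unknown;      // full response, including confidence and explanation
  previous_entry_hash: string;   // hash-chain link to the preceding entry
  entry_hash: string;            // SHA-256 over this entry's fields plus previous_entry_hash
}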
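A minimal hash-chain sketch using Node's built-in `crypto` module, assuming a variant of the interface above in which `timestamp` is an ISO string so that the hashed bytes are stable. Each entry's hash covers its fields plus the previous entry's hash, and `verify` reports the first index where the chain breaks. The genesis value and function names are hypothetical.

```typescript
import { createHash } from "node:crypto";

interface AuditLogEntry {
  id: string;
  timestamp: string; // ISO 8601 string, so the hashed bytes are stable
  input_snapshot_hash: string;
  model_binary_hash: string;
  output_decision: unknown;
  previous_entry_hash: string;
  entry_hash: string;
}

// Placeholder "previous hash" for the first entry in the chain.
const GENESIS = "0".repeat(64);

function hashEntry(e: Omit<AuditLogEntry, "entry_hash">): string {
  // Fixed field order; output_decision is serialized with JSON.stringify, so
  // producers must emit it with a stable key order.
  const payload = JSON.stringify([
    e.id, e.timestamp, e.input_snapshot_hash, e.model_binary_hash,
    e.output_decision, e.previous_entry_hash,
  ]);
  return createHash("sha256").update(payload).digest("hex");
}

function append(
  chain: AuditLogEntry[],
  data: Omit<AuditLogEntry, "previous_entry_hash" | "entry_hash">,
): AuditLogEntry {
  const previous_entry_hash = chain.length > 0 ? chain[chain.length - 1].entry_hash : GENESIS;
  const partial = { ...data, previous_entry_hash };
  const entry = { ...partial, entry_hash: hashEntry(partial) };
  chain.push(entry);
  return entry;
}

// Returns the index of the first broken link, or -1 if the chain verifies.
function verify(chain: AuditLogEntry[]): number {
  let prev = GENESIS;
  for (let i = 0; i < chain.length; i++) {
    const { entry_hash, ...rest } = chain[i];
    if (rest.previous_entry_hash !== prev || hashEntry(rest) !== entry_hash) return i;
    prev = entry_hash;
  }
  return -1;
}
```

A chain alone cannot detect truncation: deleting the newest entries leaves a shorter chain that still verifies. Periodically publishing the latest `entry_hash` somewhere the log writer cannot modify (for example, a separate WORM bucket) closes that gap.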

What to Log: The Triplet of Reproducibility

Error messages alone are not enough. You must log the triplet of reproducibility:

- The Input Snapshot (a hash in the log entry, with the full copy stored separately under access control)

- The Model Binary Version (SHA-256 of the exact model weights used)

- The Output Decision (complete response including confidence and explanations)

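Combined with a record of the surrounding inference configuration (preprocessing code version, decoding parameters, random seeds), this triplet lets you reproduce a decision years later, which is invaluable when defending against discrimination claims or responding to regulatory investigations.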
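The input hash is only useful for reproduction if the same logical input always produces the same bytes. This sketch canonicalizes JSON by sorting object keys recursively, in the spirit of RFC 8785 (JSON Canonicalization Scheme) but without claiming full conformance, and assumes inputs are plain JSON data (no `Date` or `Map` values). The model hash is computed by streaming the weights file, so multi-gigabyte files never sit in memory; `buildTriplet` and `hashFile` are hypothetical names.

```typescript
import { createHash } from "node:crypto";
import { createReadStream } from "node:fs";

// Recursively sorts object keys so that logically identical JSON inputs
// serialize to identical bytes. Assumes plain JSON data: Dates, Maps and
// class instances are not handled.
function canonicalize(value: unknown): string {
  if (Array.isArray(value)) {
    return `[${value.map((v) => canonicalize(v)).join(",")}]`;
  }
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const fields = Object.keys(obj)
      .filter((k) => obj[k] !== undefined) // JSON.stringify drops these too
      .sort()
      .map((k) => `${JSON.stringify(k)}:${canonicalize(obj[k])}`);
    return `{${fields.join(",")}}`;
  }
  // Primitives; undefined (e.g. inside an array) becomes null, as in JSON.stringify.
  return JSON.stringify(value) ?? "null";
}

const sha256Hex = (data: string): string =>
  createHash("sha256").update(data).digest("hex");

// Streams the weights file through SHA-256 so large files never sit in memory.
function hashFile(path: string): Promise<string> {
  return new Promise((resolve, reject) => {
    const hash = createHash("sha256");
    createReadStream(path)
      .on("error", reject)
      .on("data", (chunk) => hash.update(chunk))
      .on("end", () => resolve(hash.digest("hex")));
  });
}

interface ReproTriplet {
  input_snapshot_hash: string;
  model_binary_hash: string;
  output_decision: unknown;
}

// modelHash comes from hashFile, computed once per model load, not per request.
function buildTriplet(input: unknown, modelHash: string, output: unknown): ReproTriplet {
  return {
    input_snapshot_hash: sha256Hex(canonicalize(input)),
    model_binary_hash: modelHash,
    output_decision: output,
  };
}
```

Computing `hashFile` once when the model is loaded, and reusing the result for every request, keeps the per-decision cost to a single small hash.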

Visualizing Trust for the End User

All this backend architecture is useless if the human operator is flying blind. For B2B SaaS, the Transparency Dashboard is becoming a standard deliverable.

This dashboard gives your customers (the "deployers") the visibility they need to fulfill their own legal obligations. It visualizes:

Bias Metrics

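Fairness metrics across protected characteristics (demographic parity, equalized odds, calibration), fed by the same monitoring pipeline as the rest of the dashboard. Demographic parity can be computed in real time from predictions alone; equalized odds and calibration need ground-truth outcomes (for example, whether a loan was repaid), so they necessarily lag behind.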
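Demographic parity is the metric that needs only predictions, so it is the natural candidate for a live view. A minimal sketch, assuming each decision is tagged with a group label: it computes the approval rate per group and reports the gap between the highest and lowest rate. The `Outcome` shape is hypothetical, and what gap counts as acceptable is a policy decision this code does not make.

```typescript
// Hypothetical record: one automated decision tagged with the group it
// should be compared across.
interface Outcome {
  group: string;
  approved: boolean;
}

// Approval (selection) rate per group.
function selectionRates(outcomes: Outcome[]): Map<string, number> {
  const counts = new Map<string, { total: number; approved: number }>();
  for (const o of outcomes) {
    const c = counts.get(o.group) ?? { total: 0, approved: 0 };
    c.total += 1;
    if (o.approved) c.approved += 1;
    counts.set(o.group, c);
  }
  const rates = new Map<string, number>();
  counts.forEach((c, group) => rates.set(group, c.approved / c.total));
  return rates;
}

// Demographic parity difference: highest minus lowest group selection rate.
// 0 means every group is approved at the same rate.
function demographicParityDifference(outcomes: Outcome[]): number {
  const rates = Array.from(selectionRates(outcomes).values());
  return rates.length === 0 ? 0 : Math.max(...rates) - Math.min(...rates);
}
```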

Model Drift Detection

Visualizations showing when the model's behavior deviates from baseline, triggering alerts before compliance violations occur.
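The article does not name a drift statistic; the Population Stability Index (PSI) is one common choice, swapped in here for illustration. This sketch bins a baseline sample at its quantiles, compares the live sample's bin shares against the baseline's, and floors empty bins at a small epsilon so the logarithm stays finite. Values above roughly 0.25 are often treated as significant drift, but that cut-off is an industry rule of thumb, not a standard.

```typescript
// Population Stability Index: sum over bins of (live - base) * ln(live / base),
// where each term is the share of observations falling in that bin.
function psi(baseline: number[], live: number[], bins = 10, eps = 1e-6): number {
  if (baseline.length === 0 || live.length === 0) {
    throw new Error("psi needs non-empty samples");
  }
  // Bin edges at the baseline's quantiles (deciles for bins = 10).
  const sorted = [...baseline].sort((a, b) => a - b);
  const edges: number[] = [];
  for (let i = 1; i < bins; i++) {
    edges.push(sorted[Math.floor((i * sorted.length) / bins)]);
  }
  const shares = (xs: number[]): number[] => {
    const counts = new Array<number>(bins).fill(0);
    for (const x of xs) {
      let b = 0;
      while (b < edges.length && x >= edges[b]) b++;
      counts[b] += 1;
    }
    // Floor empty bins at eps so the logarithm stays finite.
    return counts.map((c) => Math.max(c / xs.length, eps));
  };
  const base = shares(baseline);
  const cur = shares(live);
  return base.reduce((sum, e, i) => sum + (cur[i] - e) * Math.log(cur[i] / e), 0);
}
```

Computing the edges from the baseline once and storing them alongside the model version keeps drift comparisons consistent across dashboards.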

Human Override Capability

That critical "stop button" we engineered earlier, exposed through a clean UI that allows authorized users to halt automated decisions immediately.

Explainability Reports

Integration with XAI techniques like SHAP and LIME to provide human-readable explanations for every decision.
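SHAP or LIME produce per-feature attributions; turning them into the `top_factors` a reviewer reads is a small ranking step. This sketch assumes the attributions arrive as a plain feature-to-number map from an upstream explainer (it does not call any SHAP or LIME library) and that a positive value pushes the score up relative to the explainer's baseline, which is how SHAP values are defined. `topFactors` and `describeFactor` are hypothetical names.

```typescript
// Feature -> attribution, as produced upstream by an explainer such as SHAP.
type Attributions = Record<string, number>;

interface Factor {
  feature: string;
  contribution: number;
  direction: "raises" | "lowers"; // relative to the explainer's baseline
}

// Ranks features by absolute contribution and keeps the k largest.
function topFactors(attributions: Attributions, k = 3): Factor[] {
  return Object.entries(attributions)
    .filter(([, v]) => Number.isFinite(v) && v !== 0)
    .sort((a, b) => Math.abs(b[1]) - Math.abs(a[1]))
    .slice(0, k)
    .map(([feature, contribution]): Factor => ({
      feature,
      contribution,
      direction: contribution > 0 ? "raises" : "lowers",
    }));
}

// One plain-language line per factor for the reviewer UI.
function describeFactor(f: Factor): string {
  return `${f.feature} ${f.direction} the score by ${Math.abs(f.contribution).toFixed(1)}`;
}
```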

Conclusion

Compliance with the EU AI Act is daunting, but it is also a forcing function for better engineering. The patterns required for compliance—better observability, rigorous versioning, and robust error handling—are the same patterns required for building reliable, high-quality software.

By adopting a "Compliance-by-Design" approach, we do not just avoid fines; we build products that are safer, more trustworthy, and ultimately more competitive in a skeptical market.

The key architectural components are:

- Transparent API Contracts: Embed metadata, confidence scores, and limitations in every response

- AI Gateway: Centralized policy enforcement, PII redaction, and shadow testing

- Circuit Breaker Pattern: State machine for human oversight with durable state persistence

- Immutable Audit Logs: WORM storage with cryptographic chaining for forensic defensibility

- Transparency Dashboard: User-facing visibility into bias metrics, drift, and override controls

The era of "move fast and break things" is over for AI systems. Welcome to the Glass Box era.
