The AI Bias Playbook (Part 7): Building a Culture of Fairness

Table of Contents

- Series Recap: The Tools Are Not Enough

- The "Three-Legged Stool" of Defensible AI Governance

- The Governance Loop

- Conclusion

- Related Series

Series Recap: The Tools Are Not Enough

Over the last six parts, we have built a comprehensive playbook. We've defined bias as a systemic, learned feature, not a "glitch" (Part 1). We've seen the staggering legal and financial consequences of failure (Part 2). We've learned to make the critical policy decision about which fairness metric to use (Part 3). And we've explored the three essential types of technical guardrails:

- Pre-Deployment Testing (Part 4)

- At-Runtime Guardrails (Part 5)

- Post-Deployment Monitoring (Part 6)

But this playbook, and all the technology it describes, is useless without a human-centric framework to manage it. You cannot buy a platform and "solve" fairness. Truly defensible and ethical AI governance combines People, Process, and Platform.

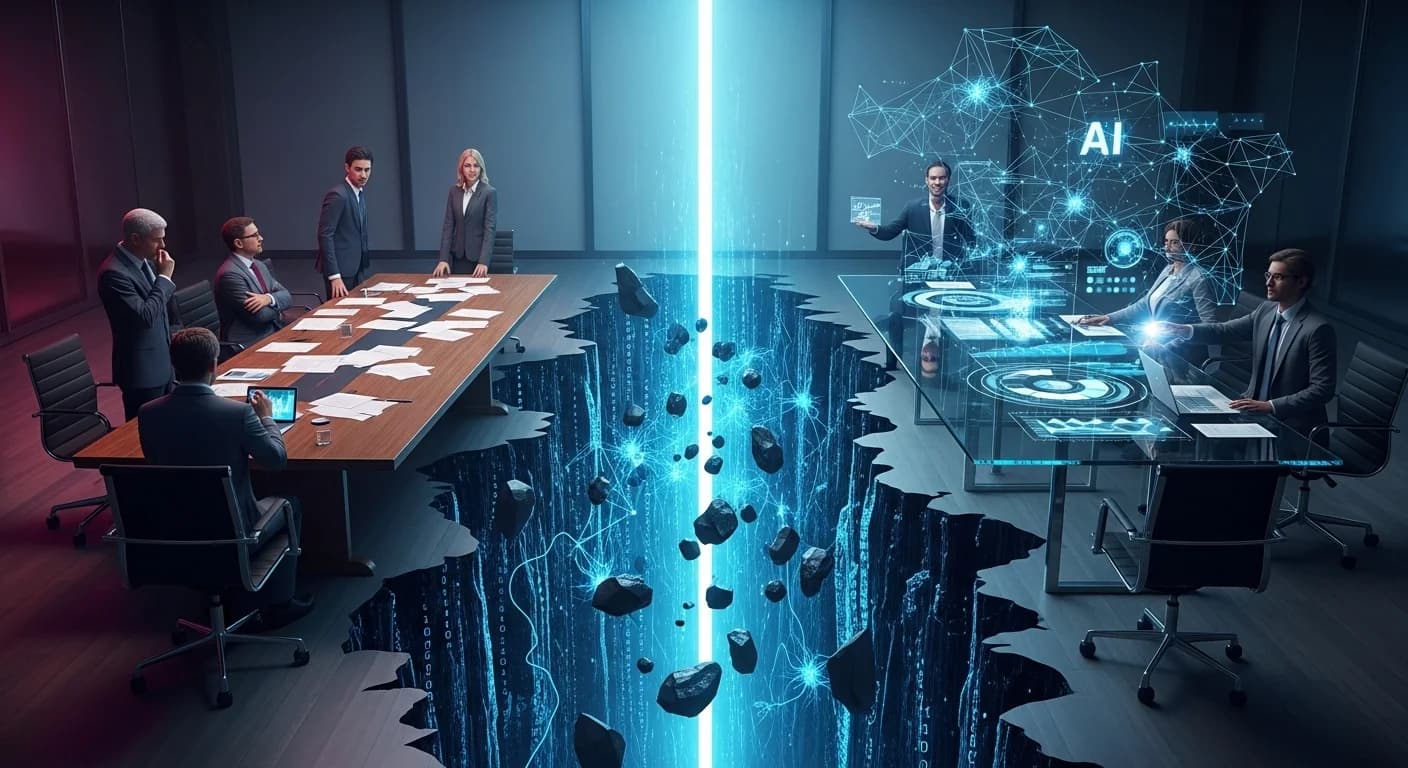

The "Three-Legged Stool" of Defensible AI Governance

This "three-legged stool" is the final, actionable framework for leaders. If any leg is weak, the entire structure will collapse.

1. PEOPLE: Diverse Teams & The Blind Spot Problem

This is the first and most important leg: "Homogeneous teams will have homogeneous blind spots."

If the team building, testing, and "red-teaming" your AI is composed entirely of people from similar backgrounds, they will almost certainly share unexamined assumptions. They will miss the biases, stereotypes, and cultural nuances that are obvious to people from different backgrounds.

The Amazon recruiting tool that downgraded candidates from "women's colleges" and Google's Gemini image generator, which mishandled historical racial context, are quintessential examples. These were not just technical failures; they were failures of imagination and perspective.

Diversity is not a "nice-to-have" HR initiative. In the age of AI, diversity of gender, ethnicity, cultural background, and cognitive experience is your single most effective risk mitigation strategy.

2. PROCESS: The AI Governance Committee

This is the formal process for owning, managing, and accepting risk. You cannot have distributed, ad hoc governance. You must create a formal, centralized "AI Governance Committee" (AIGC).

Composition

This committee must be cross-functional. It is not just an "AI ethics" or "tech" committee. It must be empowered with real authority and include leaders from:

- Legal

- Ethics and Compliance

- HR (for employment systems)

- Privacy & Data Protection

- Information Security

- Product Management

Role and Mandate

This committee is not just advisory. It owns the risk and has a clear mandate:

- Own the Policy: It makes the formal policy decision on which fairness metrics to use (from Part 3).

- Own the Gate: It reviews the pre-deployment test reports and makes the final, documented "Go / No-Go" decision for any new high-risk model (from Part 4).

- Own the Drift: It receives and reviews the "Fairness Dashboard" reports from post-deployment monitoring (from Part 6) and decides when to intervene and retrain a drifting model.
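"Own the Policy" and "Own the Gate" fit together. The committee fixes a metric and a threshold once, and every pre-deployment test report is then judged against them and filed. The Python below is a minimal sketch of that check. It assumes the policy is a single metric name plus a minimum value. The disparate impact ratio and the 0.8 threshold (the "four-fifths" rule of thumb from US employment guidance) are illustrative choices, not recommendations. All class and function names are hypothetical and are not part of any governance product. The code only produces a recommendation and a timestamped record; the committee still makes and signs the actual decision.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


def disparate_impact_ratio(selection_rates: dict[str, float]) -> float:
    """Lowest group selection rate divided by the highest; 1.0 means parity."""
    highest = max(selection_rates.values())
    if highest == 0:
        raise ValueError("No positive outcomes in any group; the ratio is undefined.")
    return min(selection_rates.values()) / highest


# Hypothetical registry: the committee's policy names a metric, the check looks it up.
METRICS = {"disparate_impact_ratio": disparate_impact_ratio}


@dataclass
class FairnessPolicy:
    metric: str       # the metric the AIGC chose (Part 3)
    min_value: float  # lowest acceptable value, set by the AIGC


@dataclass
class GateRecord:
    model_id: str
    recommendation: str  # "GO" or "NO-GO"; the committee signs the final call
    metric: str
    observed: float
    threshold: float
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def gate_check(model_id: str, selection_rates: dict[str, float],
               policy: FairnessPolicy) -> GateRecord:
    """Apply the committee's fairness policy to a pre-deployment test result."""
    observed = METRICS[policy.metric](selection_rates)
    recommendation = "GO" if observed >= policy.min_value else "NO-GO"
    return GateRecord(model_id, recommendation, policy.metric, observed, policy.min_value)


policy = FairnessPolicy(metric="disparate_impact_ratio", min_value=0.8)
record = gate_check("hiring-model-v3", {"group_a": 0.50, "group_b": 0.35}, policy)
print(record.recommendation, round(record.observed, 2))
```

Here, selection rates of 0.50 and 0.35 give a ratio of 0.7, which falls below the 0.8 threshold, so the record reads "NO-GO". The metric choice lives in the policy object rather than being hard-coded in the check. That mirrors the split of duties above: the committee owns the choice, and the platform applies it.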

3. PLATFORM: The Technology That Enables Oversight

This is the "Platform" leg: the technology that lets your People and your Process function efficiently and at scale. Your committee cannot manually review every AI decision, and your diverse teams cannot manually test every possible outcome.

The Platform consists of the tools that automate governance. This includes:

- The data auditing and pre-processing tools

- The "sandbox" environments for fairness metric testing

- The "at-runtime" guardrail systems for LLMs

- Most critically, the continuous monitoring platforms that produce the "Fairness Dashboards" your Process depends on
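A few lines of code can sketch the core job of a continuous monitoring platform. The Python below shows a hypothetical, minimal monitor. It assumes that each production decision is logged as a (group, positive outcome) pair and that the committee approved the model at a known baseline disparate impact ratio. The monitor keeps a rolling window of recent decisions and returns an alert when the ratio drops more than a chosen tolerance below that baseline. The window size and tolerance are placeholders that a committee would set. A real platform would likely also need minimum sample sizes, statistical tests, and alert deduplication.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class DriftAlert:
    """Hypothetical alert payload sent from the Platform to the AIGC."""
    model_id: str
    baseline_ratio: float
    current_ratio: float


class FairnessMonitor:
    """Tracks a rolling window of production decisions and flags fairness drift."""

    def __init__(self, model_id: str, baseline_ratio: float,
                 window: int = 1000, tolerance: float = 0.05):
        self.model_id = model_id
        self.baseline_ratio = baseline_ratio
        self.tolerance = tolerance
        self.window = deque(maxlen=window)  # oldest decisions fall off automatically

    def current_ratio(self) -> float | None:
        """Disparate impact ratio over the window, or None if there is too little signal."""
        totals: dict[str, int] = {}
        positives: dict[str, int] = {}
        for group, positive in self.window:
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + int(positive)
        rates = [positives[g] / totals[g] for g in totals]
        if len(rates) < 2 or max(rates) == 0:
            return None  # need at least two groups and some positive outcomes
        return min(rates) / max(rates)

    def record(self, group: str, positive: bool) -> DriftAlert | None:
        """Log one decision; return an alert if the ratio has drifted past tolerance."""
        self.window.append((group, positive))
        ratio = self.current_ratio()
        if ratio is not None and self.baseline_ratio - ratio > self.tolerance:
            return DriftAlert(self.model_id, self.baseline_ratio, ratio)
        return None
```

For simplicity, the sketch recomputes the ratio over the whole window on every call. That is fine for illustration, but a high-volume system would more plausibly keep running counts.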

The Governance Loop

AI bias is a fundamentally human problem, amplified by technology. The solution must, therefore, be human, empowered by technology.

These three legs—People, Process, and Platform—are not static. They form a continuous, self-correcting governance loop. This loop is the only defensible, ethical, and profitable path forward.

- Your diverse People build, test, and "red team" a new model

- Your formal Process (the AIGC) reviews the test reports and makes a "Go" decision

- The Platform (the monitoring dashboard) continuously watches the model in production

- The Platform alerts the Process (the AIGC) the moment "bias drift" is detected

- The Process (the AIGC) tasks the People (the dev team) with investigating, mitigating, and re-deploying the fixed model
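The loop above is effectively a small state machine. Writing it that way makes one property explicit: a model cannot reach production without passing through the committee. The Python below is a hypothetical sketch. The stage names, event names, and audit-log format are assumptions. Two transitions are also assumptions, because the text leaves them implicit: a "No-Go" sends the model to remediation, and a fixed model returns to committee review before it is redeployed.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    BUILD_AND_TEST = "People build, test, and red-team the model"
    COMMITTEE_REVIEW = "Process (the AIGC) reviews the test reports"
    IN_PRODUCTION = "Platform monitors the model in production"
    REMEDIATION = "People investigate and mitigate the problem"


# Allowed moves, keyed by (current stage, event). Anything else is rejected.
TRANSITIONS = {
    (Stage.BUILD_AND_TEST, "report_submitted"): Stage.COMMITTEE_REVIEW,
    (Stage.COMMITTEE_REVIEW, "go"): Stage.IN_PRODUCTION,
    (Stage.COMMITTEE_REVIEW, "no_go"): Stage.REMEDIATION,      # assumption
    (Stage.IN_PRODUCTION, "drift_alert"): Stage.REMEDIATION,
    (Stage.REMEDIATION, "fix_ready"): Stage.COMMITTEE_REVIEW,  # assumption: fixes are re-reviewed
}


@dataclass
class GovernanceLoop:
    model_id: str
    stage: Stage = Stage.BUILD_AND_TEST
    audit_log: list[str] = field(default_factory=list)

    def advance(self, event: str, note: str = "") -> Stage:
        """Move to the next stage if the event is allowed, recording the step."""
        key = (self.stage, event)
        if key not in TRANSITIONS:
            raise ValueError(f"{event!r} is not allowed during {self.stage.name}")
        new_stage = TRANSITIONS[key]
        entry = f"{self.model_id}: {self.stage.name} -[{event}]-> {new_stage.name}"
        self.audit_log.append(f"{entry} ({note})" if note else entry)
        self.stage = new_stage
        return new_stage


loop = GovernanceLoop("credit-model")
loop.advance("report_submitted")
loop.advance("go", "approved at committee meeting")
loop.advance("drift_alert")
print(loop.stage.name)
```

Every call to `advance` appends a line to `audit_log`, producing the kind of due-diligence trail this section argues for. An illegal shortcut, such as approving a model that never submitted a report, raises an error instead of silently succeeding.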

This continuous cycle is the ultimate goal. It is a living, breathing system of accountability. It creates the documentation that proves your due diligence, protects your customers from harm, and defends your organization from the legal and reputational nightmares of an unexamined algorithm.

Conclusion

This is the complete AI Bias Playbook. You now have:

- The understanding of what bias is and where it comes from

- The awareness of the legal and financial consequences

- The framework for choosing your fairness metric

- The guardrails to test, filter, and monitor for bias

- The organizational structure to sustain it all

The tools exist. The frameworks are proven. The only question is: will your organization have the courage to use them?

Related Series

For a comprehensive view of AI governance beyond bias, explore our companion series:

The AI Playbook (Part 1): The AI Compliance Tsunami — Understanding the new wave of global AI regulation and why your old governance playbook is obsolete.
